Meet W&L’s AI Fellow: Your Guide to Navigating Artificial Intelligence

Sybil Prince Nelson ’01 helps faculty, staff and students discover how to use AI responsibly and effectively, from designing assignments to understanding when human creativity should take the lead.

The rise of artificial intelligence (AI) in our everyday existence is undeniable, but so too is the uncertainty it has caused in a variety of sectors, including the realm of higher education. Recognizing the opportunities and concerns surrounding this emerging technology, Washington and Lee University created a fellowship to address the possibilities and challenges associated with the use of AI.

W&L’s artificial intelligence fellow is charged with assisting the university in educating its faculty, staff and students about applicable and responsible AI use. The university selected Sybil Prince Nelson ’01, P’28 to serve as its first AI fellow because of her combined expertise and wide-ranging perspective on the subject.

An assistant professor of mathematics, Prince Nelson is also the author of numerous science fiction novels for adults and children. Her versatility as both a mathematician and published author gives her an uncommon vantage point on AI’s creative boundaries. Her recent scholarly work included an article published in Faculty Focus titled “Regurgitative AI: Why ChatGPT Won’t Kill Original Thought.”

We recently asked Prince Nelson about her personal AI journey and her role as the campus’s inaugural AI fellow.

Take us through your journey, from first deciding to engage with AI to eventually using it throughout your personal and professional life. How do you stay current when it changes so frequently?

The summer after ChatGPT was launched, I was completely unfamiliar with it. I had heard whisperings of this new technology but had not interacted with it at all. That was also the summer I was helping my homeschooled daughter prepare for college applications in the fall. I had homeschooled her since she was in the fifth grade, so I was her teacher, her counselor, her adviser and the headmaster of what I called Nelson Academy. There was so much to organize. I was talking to a colleague from another university, and she said, “Why don’t you just use ChatGPT to help you?” I hadn’t even thought of that. Even though she is in chemistry, she explained that she used it for her essay questions on exams. She would ask Chat to answer the question for her as an undergraduate student to see if she was asking the right thing to elicit the answer she wanted. If not, she would tweak the question slightly. I then gave it a try and asked ChatGPT to make a syllabus for the ninth-grade English class I taught my daughter based on the books I had her read and the assignments I gave her. It completed the task in seconds, and I was hooked. Now, anytime there is a repetitive task or a task that I would delegate to an assistant if I had one, I go to ChatGPT. It does everything from planning my wardrobe to making a weekly meal plan to keeping track of the ages of the characters in my next book. Being a member of W&L’s PLAI Advisory Board helps keep me current. Every time I meet with those brilliant people, I learn a new way to use AI. I also read as much as I can about where the technology is going and how people use it in their daily lives.

What was your initial reaction when you were asked to become W&L’s inaugural AI fellow? What did you see as the most exciting opportunity?

I was so excited. I know how AI has personally affected my life and made me more efficient, and I couldn’t wait to pass on that knowledge.

As W&L’s first AI fellow, you’re essentially creating this position from scratch. How have you defined this role, and what do you see as your primary responsibilities to the university community?

I see my charge as three-fold. I need to stay up to date with what AI can do and how it affects our faculty, staff and students. I need to educate all stakeholders in the W&L community, including alumni and parents, about how W&L is handling this educational disruptor. And I need to help them appreciate what it can do and help them integrate it into their lives in a meaningful and ethical way.

You served as emcee for W&L’s inaugural PLAI Summit in September. What were your goals for that event, and what was your biggest takeaway?

My goal for the entire summit was for people to leave with at least one piece of information that they had not known before. It was a pretty self-selected audience. People there were already interested in AI. So I wanted to make sure that even AI enthusiasts and the AI curious were still able to be engaged. My biggest takeaway was that a liberal arts education is actually best suited to navigate this new world led by AI technology. Liberal arts education is more than just finding a numerical answer or completing a task; it is interpreting, analyzing and answering questions that no one has asked yet.

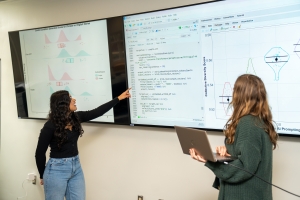

The PLAI Lab (Prompting, Learning and Artificial Intelligence Lab) opened in January as a hands-on space for the W&L community to experiment with AI tools. What’s been the community’s response so far, and what kinds of questions are people bringing to the lab?

The questions we get in the PLAI Lab are usually quite practical: Can you help me design an AI-based assignment? Can AI help me design my syllabus? Can I create a syllabus chatbot? Those are great questions, but I would love for faculty and staff to come to the PLAI Lab just to “play” and see what AI can do in and out of the classroom.

W&L recommends that every course syllabus include an explicit statement about AI use. As the AI fellow, what role do you play in helping the university develop policies around AI?

As the AI fellow, I focus on showing faculty what AI can do so they are aware of what they need to look out for in their classes. Helen MacDermott of the PLAI Advisory Board created a wonderful AI tool that walks faculty step by step through deciding whether and how they want to use AI in their classes and helps them draft the language for their syllabi.

What have you learned in this role that you didn’t expect? What has surprised you most about how the W&L community is engaging with AI?

AI is changing almost daily. I am constantly learning a new thing it can do. For example, I was working on my language learning. I asked ChatGPT to quiz me on the 100 most used Korean words, with the first five that I missed becoming my focus words for the week. I then asked it to give me progressively more difficult quizzes each day about those five words. Well, the next day, I had an email from ChatGPT saying my daily quiz was ready. I had never asked it to send me an email about the quiz, but it did. I found that amazing … and a little scary. But overall, it’s just amazing. It is acting as a true assistant for me because that is the way I treat it. What has amazed me most about the W&L community is the clear divide in sentiment about AI. I find there are people who love it and use it for everything, and then there are people who are completely opposed to it and refuse to go near it. I hope to be able to reach both groups.

You write about being honest with students about when using AI is a disservice because it short-circuits their development. How do you help students navigate this decision-making process?

I think showing the mistakes AI can make and the consequences they carry helps with the decision-making process. There are people losing their jobs or their places in graduate school due to the misuse of AI. Students have to not only cite AI use properly, but also be expert enough in their field to realize when AI is wrong. They have to be completely ready and willing to accept any consequences from blindly relying on AI.

What’s your advice for faculty members who are anxious about AI or unsure how to address it in their classrooms? Where should they start?

I encourage them to think back to how they might have felt with the disruption of Wikipedia or smartphones. Or, if they have been teaching long enough, the internet itself. Yes, at first it was probably scary, and they thought education would never be the same. But now, those are accepted sources of information. Eventually, AI will seamlessly embed itself into our educational landscape. It will make certain things more efficient, allowing us to do more in the classroom than we had been able to before. But in order to get ready for that, they need to start exploring. Join one of my bootcamps and try a few fun prompts to see what it can do.

Beyond environmental impact, what does “responsible AI usage” mean to you in an academic context? How do you help students and faculty think through the ethical dimensions of when and how to use these tools?

I tell students and faculty that AI should be a tool, an assistant or a tutor. You are the expert, not the AI. If you keep AI in its role, then it reduces the possibility of unethical usage. You cannot take the output of a chatbot and present it as truth, especially your truth. The product from an AI needs to be a starting point of a back-and-forth conversation, the output being continuously improved until it gets to a presentable place.

You’ve noted that W&L has “emerged as a leader for other liberal arts universities in terms of defining what it means to be AI competent.” What does AI competence look like at a liberal arts institution?

To me, AI competence means knowing when and when not to use AI. You don’t want to use AI when you want to express your creative voice. Only you have that voice. An AI will not be able to replicate it, and it may stifle your own. When you do use AI, you have to be enough of an expert in the field to question the results. You have to be able to identify errors and hallucinations and be able to fix them.

Sybil Prince Nelson, assistant professor of mathematics and campus AI fellow
